![[ 4G Stormtrooper ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/stormtrooper-4g.jpg)

At last! After many months of collecting data, crafting directives, and testing results, I am thrilled to announce the release of the 4G Blacklist! The 4G Blacklist is a next-generation protective firewall that secures your site against a wide range of automated attacks and other malicious activity. Continue reading »

I really hate bad robots. When a web crawler, spider, bot — or whatever you want to call it — behaves in a way that is contrary to expected and/or accepted protocols, we say that the bot is acting suspiciously, behaving badly, or just acting stupid in general. Unfortunately, there are thousands — if not hundreds of thousands — of nefarious bots violating our sites every minute of the day. For the most part, there are effective methods available enabling […] Continue reading »

![[ Building the Hoover Dam, Part 1 ]](https://perishablepress.com/wp/wp-content/images/2009/building-4g/hoover-dam_01.jpg)

Last year, after much research and discussion, I built a concise, lightweight security strategy for Apache-powered websites. Prior to the development of this strategy, I relied on several extensive blacklists to protect my sites against malicious user agents and IP addresses. Unfortunately, these mega-lists eventually became unmanageable and ineffective. As increasing numbers of attacks hit my server, I began developing new techniques for defending against external threats. This work soon culminated in the release of a “next-generation” blacklist that works […] Continue reading »

Working a great deal with blacklists, I am frequently trying to isolate and identify problematic code. For example, a blacklist implementation may suddenly prevent a certain type of page from loading. In order to resolve the issue, the blacklist is immediately removed and tested for the offending directive(s). This situation is common to other coding languages as well, especially when dealing with CSS. Identifying problem code is more of an art form than a science, but fortunately, there are a […] Continue reading »

Ever wanted to provide automatic language translations of your web pages without installing another plugin? Here is a valid, SEO-friendly technique that takes advantage of Google’s free translation service. All you need is a PHP-enabled server and you’re good to go. Just copy and paste the following code into the desired location in your page template and enjoy the results. Once in place, this code will produce translation links for eight common languages for every page on your site. Grab, […] Continue reading »

I recently added OpenSearch functionality to Perishable Press. Now, OpenSearch-enabled browsers such as Firefox and IE 7 alert users with the option to customize their browser’s built-in search feature with an exclusive OpenSearch-powered search option for Perishable Press. The autodiscovery feature of supportive browsers detects the custom search protocol and enables users to easily add it to their collection of readily available site-specific search options. Now, users may search the entire Perishable Press domain with the click of a button. […] Continue reading »

There are two possibilities here: Yahoo!’s Slurp crawler is broken or Yahoo! lies about obeying Robots directives. Either case isn’t good. Slurp just can’t seem to keep its nose out of my private business. And, as I’ve discussed before, this happens all the time. Here are the two most recent offenses, as recorded in the log file for my blackhole spider trap: Continue reading »

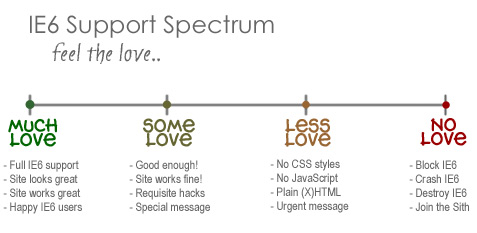

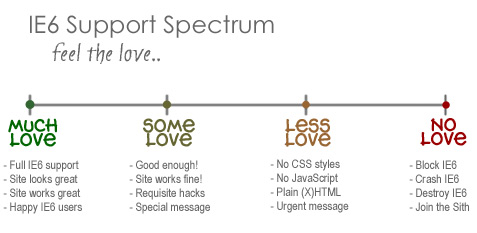

I know, I know, not another post about IE6! I actually typed this up a couple of weeks ago while immersed in my site redesign project. I had recently decided that I would no longer support that terrible browser, and this tangential post just kind of “fell out.” I wasn’t sure whether or not to post it, but I recently decided to purge my draft stash by posting everything for your reading pleasure. Thus, you may see a few turds […] Continue reading »

As announced at IE Death March, I recently dropped support for Internet Explorer 6. As newer versions of Firefox, Opera, and Safari (and others) continue to improve consistency and provide better support for standards-based techniques, having to carry IE 6 along for the ride — for any reason — is painful. Thanks to the techniques described in this article, I am free to completely ignore (figuratively and literally) IE 6 when developing and designing websites. Continue reading »

An ongoing series of articles on the fine art of malicious exploit detection and prevention. Learn about preventing the sneaky, mischievous, and deceptive practices of some of the worst spammers, scrapers, crackers, and other scumbags on the Internet. Continue reading »

Here at Perishable Press, I love to write about minimalism, simplicity, and usability in user-interface and web design. I have always enjoyed the minimalist aesthetic, as my Perishable Theme plainly illustrates. Fortunately, many designers and developers have embraced the minimalist concept, and continue to produce and promote minimalist design principles in their designs. As often as my schedule allows, I like to take the time to explore and share some of my favorite minimalist designs, and so far have managed […] Continue reading »

As my readers know, I spend a lot of time digging through error logs, preventing attacks, and reporting results. Occasionally, some moron will pull a stunt that deserves exposure, public humiliation, and banishment. There is certainly no lack of this type of nonsense, as many of you are well aware. Even so, I have to admit that I am very happy with my latest strategy against crackers, spammers, and other scumbags, namely, the 3G Blacklist. Since implementing this effective […] Continue reading »

Hmmm.. Let’s see here. Google can do it. MSN/Live can do it. Even Ask can do it. So why oh why can’t Yahoo’s grubby Slurp crawler manage to adhere to robots.txt crawl directives? Just when I thought Yahoo! finally figured it out, I discover more Slurp tracks in my Blackhole trap for bad spiders: Continue reading »

This was just too juicy to pass up. Blogstorm recently blogged about an easy JavaScript technique for making any website editable. After checking it out for myself, I just had to share it here at Perishable Press. Here it is: `javascript:document.body.contentEditable='true'; document.designMode='on'; void 0` Paste that single line of code into the address bar of any modern browser and have fun editing the page. Obviously, any changes will only apply to the page as seen in your browser, not the […] Continue reading »

![[ Count Chimpula ]](https://perishablepress.com/wp/wp-content/images/2008/misc-chunks/verify-feedcount.jpg)

Recently, I received a bizarre email accusing me of calling someone out on their fake Feedburner subscriber count. Apparently, some desperate blogger had been claiming to have something like 30,000 Feedburner subscribers when in reality they only had around 700. From what I could tell, the fraudulent site was displaying a counterfeit Feedburner subscriber-count badge using some fancy CSS image-replacement or something. Whatever. I really couldn’t care less, but the information contained in the email got me thinking: Providing an […] Continue reading »

![[ Thumbnail: Google W3C Invalidation ]](https://perishablepress.com/wp/wp-content/images/2008/google-check/google-invalidation_mid.gif)

Consider the Google home page — arguably the most popular, highly visited web page in the entire world. Such a simple page, right? You would think that such a simple design would fully embrace Web Standards. I mean, think about it for a moment.. How would you or I throw down a few lists, a search field, and a logo image? Something like this, maybe: Continue reading »
