![[ Screenshot: The Legion of Doom ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/multiple-addresses.jpg)

Let’s face it. There’s just as much scum on the Internet as there is out there in the “real world.” Maybe even more, who knows. From scammers and spammers to scrapers and crackers, the Web is just crawling with all sorts of pathetic scumbags. As predictably random as much of the malicious activity happens to be, it is virtually guaranteed that you will be hounded by at least a few persistent IP addresses that, for whatever reason, have latched on […] Continue reading »

Normally, when visitors post a comment to your site, specific types of client data are associated with the request. Commonly, a client will provide a user agent, a referrer, and a host header. When any of these variables is absent, there is good reason to suspect foul play. For example, virtually all browsers provide some sort of user-agent name to identify themselves. Conversely, malicious scripts directly posting spam and other payloads to your site frequently operate without specifying a user […] Continue reading »

When designing sites, it is often useful to identify different pages by adding an ID attribute to the <body></body> element. Commonly, the name of the page is used as the attribute value, for example: <body id="about"></body> In this case, “about” would be the body ID for the “About” page, which would be named something like “about.php”. Likewise, other pages would have unique IDs as well, for example: <body id="archive"> </body><body id="contact"> </body><body id="subscribe"> </body><body id="portfolio"></body> ..again, with each ID associated […] Continue reading »

Just like last year, this Spring I have been taking some time to do some general maintenance here at Perishable Press. This includes everything from fixing broken links and resolving errors to optimizing scripts and eliminating unnecessary plugins. I’ll admit, this type of work is often quite dull, however I always enjoy the process of cleaning up my HTAccess files. In this post, I share some of the changes made to my HTAccess files and explain the reasoning behind each […] Continue reading »

![Error establishing a database connection [ Screenshot: WP Default Database Error Page ]](https://perishablepress.com/wp/wp-content/images/2009/install-security/h4x0rd_03.gif)

The other day, my server crashed and Perishable Press was unable to connect to the MySQL database. Normally, when WordPress encounters a database error, it delivers a specific error message similar to the following: Continue reading »

Given my propensity to discuss matters involving error log data (e.g., monitoring malicious behavior, setting up error logs, and creating extensive blacklists), I am often asked about the best way to go about monitoring 404 and other types of server errors. While I consider myself to be a novice in this arena (there are far brighter people with much greater experience), I do spend a lot of time digging through log entries and analyzing data. So, when asked recently about […] Continue reading »

You have seen user-agent blacklists, IP blacklists, 4G Blacklists, and everything in between. Now, in this article, for your sheer and utter amusement, I present a collection of over 8000 blacklisted referrers. Shortcut: skip the article and jump to Disclaimer and Download » Referrer Spam Sucks For the uninitiated, in teh language of teh Web, a referrer is the online resource from whence a visitor happened to arrive at your site. For example, if Johnny the Wonder Parrot was visiting the […] Continue reading »

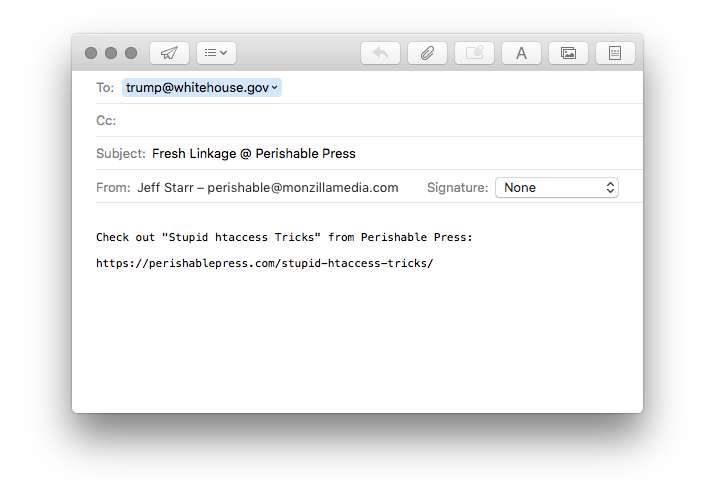

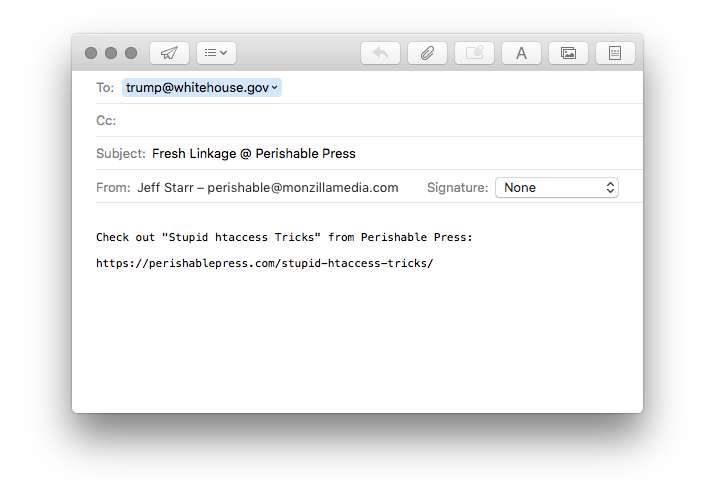

In addition to your choice collection of “Share This” links, you may also want to provide visitors with a link that enables them to quickly and easily send the URL permalink of any post to their friends via email. This is a great way to increase your readership and further your influence. Just copy & paste the following code into the desired location in your page template: <a href="mailto:?subject=Fresh%20Linkage%20@%20Perishable%20Press&body=Check%20out%20<?php the_permalink(); ?>%20from%20Perishable%20Press" title="Send a link to this post via email" rel="nofollow">Share […] Continue reading »

![[ Image: Inverted Eclipse ]](https://perishablepress.com/wp/wp-content/images/2009/agent-blacklist/inverted-eclipse.jpg)

As discussed in my recent article, Eight Ways to Blacklist with Apache’s mod_rewrite, one method of stopping spammers, scrapers, email harvesters, and malicious bots is to blacklist their associated user agents. Apache enables us to target bad user agents by testing the user-agent string against a predefined blacklist of unwanted visitors. Any bot identifying itself as one of the blacklisted agents is immediately and quietly denied access. While this certainly isn’t the most effective method of securing your site against […] Continue reading »

Check out this sweet composition of aural styles discovered in the stylesheet for the W3C’s website: /* AURAL STYLES (via W3C) */ @media aural { h1, h2, h3, h4, h5, h6 { voice-family: paul, male; stress: 20; richness: 90 } h1 { pitch: x-low; pitch-range: 90 } h2 { pitch: x-low; pitch-range: 80 } h3 { pitch: low; pitch-range: 70 } h4 { pitch: medium; pitch-range: 60 } h5 { pitch: medium; pitch-range: 50 } h6 { pitch: medium; pitch-range: […] Continue reading »

![[ 4G Stormtrooper ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/stormtrooper-4g.jpg)

At last! After many months of collecting data, crafting directives, and testing results, I am thrilled to announce the release of the 4G Blacklist! The 4G Blacklist is a next-generation protective firewall that secures your site against a wide range of automated attacks and other malicious activity. Continue reading »

![[ Building the Hoover Dam, Part 1 ]](https://perishablepress.com/wp/wp-content/images/2009/building-4g/hoover-dam_01.jpg)

Last year, after much research and discussion, I built a concise, lightweight security strategy for Apache-powered websites. Prior to the development of this strategy, I relied on several extensive blacklists to protect my sites against malicious user agents and IP addresses. Unfortunately, these mega-lists eventually became unmanageable and ineffective. As increasing numbers of attacks hit my server, I began developing new techniques for defending against external threats. This work soon culminated in the release of a “next-generation” blacklist that works […] Continue reading »

In my recent article on blocking proxy servers, I explain how to use HTAccess to deny site access to a wide range of proxy servers. The method works great, but some readers want to know how to allow access for specific proxy servers while denying access to as many other proxies as possible. Fortunately, the solution is as simple as adding a few lines to my original proxy-blocking method. Specifically, we may allow any requests coming from our whitelist of […] Continue reading »

One of the oldest JavaScript tricks in the book involves providing a “print this!” link for visitors that enables them to summon their operating system’s default print dialogue box to facilitate quick and easy printing of whatever page they happen to be viewing. With the old way of pulling this little stunt, we write this in the markup comprising the target “print this!” link in question: Continue reading »

Shortest post ever! You can quickly and easily apply transparency to any supportive element by adding the following CSS code your stylesheet: selector { filter: alpha(opacity=50); /* internet explorer */ -khtml-opacity: 0.5; /* khtml, old safari */ -moz-opacity: 0.5; /* mozilla, netscape */ opacity: 0.5; /* fx, safari, opera */ } Check the code comments to see what’s doing what, and feel free to adjust the level of transparency by editing the various property values. Also, remember to replace “selector” […] Continue reading »

With the recent Feedburner service outage, many sites across the Web experienced severe drops in their Feedburner subscriber counts. Apparently, Google is requiring all Feedburner accounts to be transferred over to Google by the end of February. In the midst of this mass migration, chaotic subscriber data has been reported to include everything from dramatic count drops and fluctuating reach statistics to zero-count values and dreaded “N/A” subscriber-count errors. Obviously, displaying erroneous subscriber-count data on your site is not a […] Continue reading »

![[ Screenshot: The Legion of Doom ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/multiple-addresses.jpg)

![Error establishing a database connection [ Screenshot: WP Default Database Error Page ]](https://perishablepress.com/wp/wp-content/images/2009/install-security/h4x0rd_03.gif)

![[ Image: Inverted Eclipse ]](https://perishablepress.com/wp/wp-content/images/2009/agent-blacklist/inverted-eclipse.jpg)

![[ 4G Stormtrooper ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/stormtrooper-4g.jpg)

![[ Building the Hoover Dam, Part 1 ]](https://perishablepress.com/wp/wp-content/images/2009/building-4g/hoover-dam_01.jpg)