![[ Firefox ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/firefox-logo.png)

In a perfect world, I don’t use CSS hacks, and certainly don’t recommend them. In the unpredictable, chaos of the real world, however, there are many situations where applying styles to particular browsers is indeed the optimal solution. Most of the time, I am targeting or filtering Internet Explorer (because it is so incredibly awesome), but occasionally I need to tweak something in a modern browser like Firefox, Safari, or Opera. In this article, we’ll look at CSS hacks targeting […] Continue reading »

Given my propensity to discuss matters involving error log data (e.g., monitoring malicious behavior, setting up error logs, and creating extensive blacklists), I am often asked about the best way to go about monitoring 404 and other types of server errors. While I consider myself to be a novice in this arena (there are far brighter people with much greater experience), I do spend a lot of time digging through log entries and analyzing data. So, when asked recently about […] Continue reading »

You have seen user-agent blacklists, IP blacklists, 4G Blacklists, and everything in between. Now, in this article, for your sheer and utter amusement, I present a collection of over 8000 blacklisted referrers. Shortcut: skip the article and jump to Disclaimer and Download » Referrer Spam Sucks For the uninitiated, in teh language of teh Web, a referrer is the online resource from whence a visitor happened to arrive at your site. For example, if Johnny the Wonder Parrot was visiting the […] Continue reading »

![[ Image: Inverted Eclipse ]](https://perishablepress.com/wp/wp-content/images/2009/agent-blacklist/inverted-eclipse.jpg)

As discussed in my recent article, Eight Ways to Blacklist with Apache’s mod_rewrite, one method of stopping spammers, scrapers, email harvesters, and malicious bots is to blacklist their associated user agents. Apache enables us to target bad user agents by testing the user-agent string against a predefined blacklist of unwanted visitors. Any bot identifying itself as one of the blacklisted agents is immediately and quietly denied access. While this certainly isn’t the most effective method of securing your site against […] Continue reading »

![[ 4G Stormtrooper ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/stormtrooper-4g.jpg)

At last! After many months of collecting data, crafting directives, and testing results, I am thrilled to announce the release of the 4G Blacklist! The 4G Blacklist is a next-generation protective firewall that secures your site against a wide range of automated attacks and other malicious activity. Continue reading »

I really hate bad robots. When a web crawler, spider, bot — or whatever you want to call it — behaves in a way that is contrary to expected and/or accepted protocols, we say that the bot is acting suspiciously, behaving badly, or just acting stupid in general. Unfortunately, there are thousands — if not hundreds of thousands — of nefarious bots violating our sites every minute of the day. For the most part, there are effective methods available enabling […] Continue reading »

![[ Building the Hoover Dam, Part 1 ]](https://perishablepress.com/wp/wp-content/images/2009/building-4g/hoover-dam_01.jpg)

Last year, after much research and discussion, I built a concise, lightweight security strategy for Apache-powered websites. Prior to the development of this strategy, I relied on several extensive blacklists to protect my sites against malicious user agents and IP addresses. Unfortunately, these mega-lists eventually became unmanageable and ineffective. As increasing numbers of attacks hit my server, I began developing new techniques for defending against external threats. This work soon culminated in the release of a “next-generation” blacklist that works […] Continue reading »

![Hypothetical archive example showing posts listed in three category-specific columns [ Screenshot: Triple Archive Columns ]](https://perishablepress.com/wp/wp-content/images/2009/vertical-loops/triple-column.gif)

Here at Perishable Press, the number of posts listed in my archives is rapidly approaching the 700 mark. While this is good news in general, displaying such a large number of posts in an effective, user-friendly fashion continues to prove challenging. Unfortunately, my current strategy of simply dumping all posts into an unordered list just isn’t working. I think it’s fair to say that archive lists containing more than like 50 or 100 post titles are effectively useless and nothing […] Continue reading »

Working a great deal with blacklists, I am frequently trying to isolate and identify problematic code. For example, a blacklist implementation may suddenly prevent a certain type of page from loading. In order to resolve the issue, the blacklist is immediately removed and tested for the offending directive(s). This situation is common to other coding languages as well, especially when dealing with CSS. Identifying problem code is more of an art form than a science, but fortunately, there are a […] Continue reading »

Ever wanted to provide automatic language translations of your web pages without installing another plugin? Here is a valid, SEO-friendly technique that takes advantage of Google’s free translation service. All you need is a PHP-enabled server and you’re good to go. Just copy and paste the following code into the desired location in your page template and enjoy the results. Once in place, this code will produce translation links for eight common languages for every page on your site. Grab, […] Continue reading »

I recently added OpenSearch functionality to Perishable Press. Now, OpenSearch-enabled browsers such as Firefox and IE 7 alert users with the option to customize their browser’s built-in search feature with an exclusive OpenSearch-powered search option for Perishable Press. The autodiscovery feature of supportive browsers detects the custom search protocol and enables users to easily add it to their collection of readily available site-specific search options. Now, users may search the entire Perishable Press domain with the click of a button. […] Continue reading »

A great way to enhance the visual appearance of various block-level elements is to use a “rounded-corner” effect. For example, throughout the current design for this site, I am using rounded corners on several different types of elements, including image borders, content panels, and even pre-formatted code blocks. Some of these rounded-corner effects are accomplished via multiple <div></div>s and a few background images, while others are created strictly with CSS. Of these two different methods, extra images and markup are […] Continue reading »

There are two possibilities here: Yahoo!’s Slurp crawler is broken or Yahoo! lies about obeying Robots directives. Either case isn’t good. Slurp just can’t seem to keep its nose out of my private business. And, as I’ve discussed before, this happens all the time. Here are the two most recent offenses, as recorded in the log file for my blackhole spider trap: Continue reading »

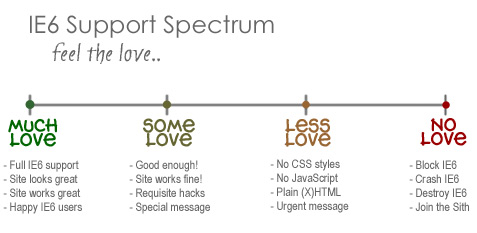

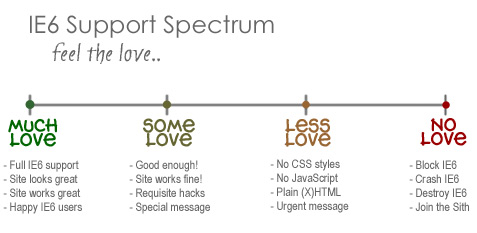

I know, I know, not another post about IE6! I actually typed this up a couple of weeks ago while immersed in my site redesign project. I had recently decided that I would no longer support that terrible browser, and this tangential post just kind of “fell out.” I wasn’t sure whether or not to post it, but I recently decided to purge my draft stash by posting everything for your reading pleasure. Thus, you may see a few turds […] Continue reading »

As announced at IE Death March, I recently dropped support for Internet Explorer 6. As newer versions of Firefox, Opera, and Safari (and others) continue to improve consistency and provide better support for standards-based techniques, having to carry IE 6 along for the ride — for any reason — is painful. Thanks to the techniques described in this article, I am free to completely ignore (figuratively and literally) IE 6 when developing and designing websites. Continue reading »

An ongoing series of articles on the fine art of malicious exploit detection and prevention. Learn about preventing the sneaky mischievous and deceptive practices of some of the worst spammers, scrapers, crackers, and other scumbags on the Internet. Continue reading »

![[ Firefox ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/firefox-logo.png)

![[ Image: Inverted Eclipse ]](https://perishablepress.com/wp/wp-content/images/2009/agent-blacklist/inverted-eclipse.jpg)

![[ 4G Stormtrooper ]](https://perishablepress.com/wp/wp-content/images/2009/misc-chunks/stormtrooper-4g.jpg)

![[ Building the Hoover Dam, Part 1 ]](https://perishablepress.com/wp/wp-content/images/2009/building-4g/hoover-dam_01.jpg)

![Hypothetical archive example showing posts listed in three category-specific columns [ Screenshot: Triple Archive Columns ]](https://perishablepress.com/wp/wp-content/images/2009/vertical-loops/triple-column.gif)