Time for another FeedBurner redirect tutorial! In my previous FeedBurner-redirect post, I provided an improved HTAccess method for redirecting your site’s main feed and comment feed to their respective FeedBurner URLs. In this tutorial, we redirect individual WordPress category feeds to their respective FeedBurner URLs. We will also look at the complete code required to redirect all of the above: the main feed, the comments feed, and of course any number of individual category feeds. Let’s jump into it. Continue reading »

There are two possibilities here: Yahoo!’s Slurp crawler is broken, or Yahoo! lies about obeying robots directives. Neither possibility is good. Slurp just can’t seem to keep its nose out of my private business. And, as I’ve discussed before, this happens all the time. Here are the two most recent offenses, as recorded in the log file for my blackhole spider trap: Continue reading »

Recently, a client wanted to deliver her blog feed through Feedburner to take advantage of its excellent statistical features. Establishing a Feedburner-delivered feed requires three things: a valid feed URL, a Feedburner account, and a redirect method. For permalink-enabled WordPress feed URLs, configuring the required redirect is straightforward: either install the Feedburner Feedsmith plugin or use an optimized HTAccess technique. Unfortunately, for sites without permalinks enabled, the Feedsmith plugin is effectively useless, and virtually all of the HTAccess methods currently […] Continue reading »

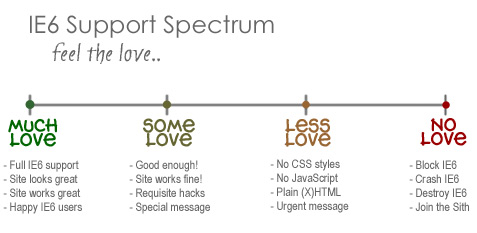

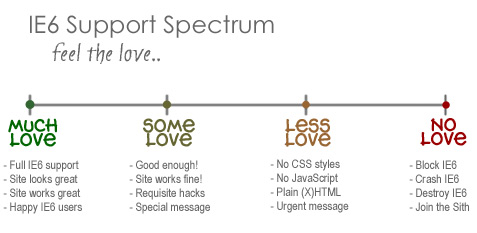

I know, I know, not another post about IE6! I actually typed this up a couple of weeks ago while immersed in my site redesign project. I had recently decided that I would no longer support that terrible browser, and this tangential post just kind of “fell out.” I wasn’t sure whether or not to post it, but I recently decided to purge my draft stash by posting everything for your reading pleasure. Thus, you may see a few turds […] Continue reading »

An ongoing series of articles on the fine art of malicious exploit detection and prevention. Learn about preventing the sneaky, mischievous, and deceptive practices of some of the worst spammers, scrapers, crackers, and other scumbags on the Internet. Continue reading »

As my readers know, I spend a lot of time digging through error logs, preventing attacks, and reporting results. Occasionally, some moron will pull a stunt that deserves exposure, public humiliation, and banishment. There is certainly no lack of this type of nonsense, as many of you are well aware. Even so, I have to admit that I am very happy with my latest strategy against crackers, spammers, and other scumbags, namely, the 3G Blacklist. Since implementing this effective […] Continue reading »

Hmmm.. Let’s see here. Google can do it. MSN/Live can do it. Even Ask can do it. So why oh why can’t Yahoo’s grubby Slurp crawler manage to adhere to robots.txt crawl directives? Just when I thought Yahoo! finally figured it out, I discover more Slurp tracks in my Blackhole trap for bad spiders: Continue reading »

I need your help! I am losing my mind trying to solve another baffling mystery. For the past three or four months, I have been recording many 404 errors generated by msnbot, Yahoo-Slurp, and other spider crawls. These errors result from invalid requests for URLs containing query strings such as the following:

- https://example.com/press/page/2/?tag=spam
- https://example.com/press/page/3/?tag=code
- https://example.com/press/page/2/?tag=email
- https://example.com/press/page/2/?tag=xhtml
- https://example.com/press/page/4/?tag=notes
- https://example.com/press/page/2/?tag=flash
- https://example.com/press/page/2/?tag=links
- https://example.com/press/page/3/?tag=theme
- https://example.com/press/page/2/?tag=press

Note: For these example URLs, I replaced my domain, perishablepress.com, with the generic example.com. Turns out that listing the plain-text […] Continue reading »

![[ 3G Stormtrooper ]](https://perishablepress.com/wp/wp-content/images/2008/blacklist-3g/blacklist-series_00.jpg)

In the now-complete series, Building the 3G Blacklist, I share insights and discoveries concerning website security and protection against malicious attacks. Each article in the series focuses on unique blacklist strategies designed to protect sites transparently, effectively, and efficiently. The five articles culminate in the release of the next generation 3G Blacklist. Here is a quick summary of the entire Building the 3G Blacklist series: Continue reading »

![[ 3G Stormtroopers ]](https://perishablepress.com/wp/wp-content/images/2008/blacklist-3g/blacklist-release.jpg)

After much research and discussion, I have developed a concise, lightweight security strategy for Apache-powered websites. Prior to the development of this strategy, I relied on several extensive blacklists to protect my sites against malicious user agents and IP addresses. Over time, these mega-lists became unmanageable and ineffective. As increasing numbers of attacks hit my server, I began developing new techniques for defending against external threats. This work soon culminated in the release of a “next-generation” blacklist that works by […] Continue reading »

![[ 3G Stormtroopers (Red Version) ]](https://perishablepress.com/wp/wp-content/images/2008/blacklist-3g/blacklist-series_05.jpg)

In this continuing five-article series, I share insights and discoveries concerning website security and protecting against malicious attacks. Wrapping up the series with this article, I provide the final key to our comprehensive blacklist strategy: selectively blocking individual IPs. Previous articles also focus on key blacklist strategies designed to protect your site transparently, effectively, and efficiently. In the next article, these five articles will culminate in the release of the next generation 3G Blacklist. Continue reading »

![[ 3G Stormtroopers (Team Aqua) ]](https://perishablepress.com/wp/wp-content/images/2008/blacklist-3g/blacklist-series_04.jpg)

In this continuing five-article series, I share insights and discoveries concerning website security and protecting against malicious attacks. In this fourth article, I build upon previous ideas and techniques by improving the directives contained in the original 2G Blacklist. Subsequent articles will focus on key blacklist strategies designed to protect your site transparently, effectively, and efficiently. At the conclusion of the series, the five articles will culminate in the release of the next generation 3G Blacklist. Continue reading »

![[ 3G Stormtroopers (Deep Purple) ]](https://perishablepress.com/wp/wp-content/images/2008/blacklist-3g/blacklist-series_03.jpg)

In this continuing five-article series, I share insights and discoveries concerning website security and protecting against malicious attacks. In this third article, I discuss targeted, user-agent blacklisting and present an alternate approach to preventing site access for the most prevalent and malicious user agents. Subsequent articles will focus on key blacklist strategies designed to protect your site transparently, effectively, and efficiently. At the conclusion of the series, the five articles will culminate in the release of the next generation 3G […] Continue reading »

![[ 3G Stormtroopers (Green Machine) ]](https://perishablepress.com/wp/wp-content/images/2008/blacklist-3g/blacklist-series_02.jpg)

In this continuing five-article series, I share insights and discoveries concerning website security and protecting against malicious attacks. In this second article, I present an incredibly powerful method for eliminating malicious query string exploits. Subsequent articles will focus on key blacklist strategies designed to protect your site transparently, effectively, and efficiently. At the conclusion of the series, the five articles will culminate in the release of the next generation 3G Blacklist. Improving Security by Preventing Query String Exploits A vast […] Continue reading »

![[ 3G Stormtroopers (Blue Dream) ]](https://perishablepress.com/wp/wp-content/images/2008/blacklist-3g/blacklist-series_01.jpg)

In this series of five articles, I share insights and discoveries concerning website security and protecting against malicious attacks. In this first article of the series, I examine the process of identifying attack trends and using them to immunize against future attacks. Subsequent articles will focus on key blacklist strategies designed to protect your site transparently, effectively, and efficiently. At the conclusion of the series, the five articles will culminate in the release of the next generation 3G Blacklist. Improving […] Continue reading »

In a previous article, I explain how to redirect your WordPress feeds to Feedburner. Doing so enables you to take advantage of its many freely provided, highly useful tracking and statistical services. Although there are a few important things to consider before optimizing your feeds and switching to Feedburner, many WordPress users redirect their blog’s two main feeds (“main content” and “all comments”) using either a plugin or HTAccess directly. Here is the […] Continue reading »
